Amazon Redshift — Concurrency Scaling Feature

Background

Motivation

Innovid is the largest independent global video advertising platform that empowers brands, agencies, and publishers to customize and deliver high-performance advertising across all screens, from mobile devices to connected TV.

Innovid processes more than 5 billion events per day. A Spark cluster on Amazon EMR performs the basic processing, and a business logic layer then validates the raw data and applies business rules to it. This mission-critical layer is implemented on Amazon Redshift, which aggregates the raw data and slices it into various forms of information. We believe that many companies, in our industry and in others, follow the same approach when dealing with big data on a continuous basis. The analyzed and aggregated data is also copied from Amazon Redshift to Amazon Aurora MySQL, and from Aurora it is served to external customers through dashboards or offline reports.

The amount of data we receive and process daily, along with the number of campaigns Innovid manages, has grown significantly. Our clients need more reports with even more access to raw, unaggregated data. These analytical reports are complex and can’t be executed effectively on OLTP systems. To ensure that offline reporting won’t affect production data processing and delay our data readiness, we created two Workload Management (WLM) queues in Amazon Redshift.

Amazon Redshift has been serving us well since the preview of the service in 2013 and has become more performant year over year. We still want to onboard more workloads without having to manage a fleet of clusters ourselves, and we can’t accept degraded performance or queries waiting in queue for our mission-critical applications. Speed matters.

The Options

We evaluated several options, including relying more on Aurora, cloning our Amazon Redshift cluster, and competitive offerings such as Snowflake. First and foremost, the chosen solution has to provide great performance, which we cannot compromise on, but it also needs to be easy to use and cost-effective. Unpredictable cost, even when our workload isn’t predictable, isn’t an option for a company that aspires to provide the best value and lowest cost to its customers.

Aurora

We are already using Aurora as part of our database arsenal at Innovid, and we love it. We have four Aurora clusters across various data centers. Aurora scales well and works fast, and with its ability to scale up to 15 read replicas it can handle lots of requests.

While Aurora can handle up to 64 TB of data storage, it cannot query files in Amazon S3. We store data in Parquet files on Amazon S3 and query them using Amazon Redshift Spectrum. Redshift Spectrum is heavily used by our developers and support team. As mentioned before, some of our heaviest reports are generated against data in Amazon S3, so being able to query Amazon S3 is a mandatory requirement.

Cloned Amazon Redshift Cluster

Another option we discussed was to clone our production cluster to a new cluster and use the new cluster for reporting and dashboard purposes. This solution would prevent dashboard and report generation from affecting our main production processes. However, the clone would soon hit the same limit of 50 concurrent queries, so this option was quickly taken off the table.

Snowflake or Other 3rd Party Databases

As a technology company, we always evaluate additional solutions. We learned about Snowflake as a technology that can handle a large number of concurrent requests.

Because it is a third-party offering that is not native to AWS, this solution would have taken us down a new, unexplored path. It could take a long time to implement and incorporate into our evolved stack on AWS, and we therefore considered it risky. We would know how it starts, but not how or when it ends.

As mentioned above, we’re looking for performance, ease of use and low cost. Unpredictable cost, not being able to query data directly in Amazon S3 as part of our data lake strategy, and lack of integration with AWS services made this option less relevant for us.

The Surprise

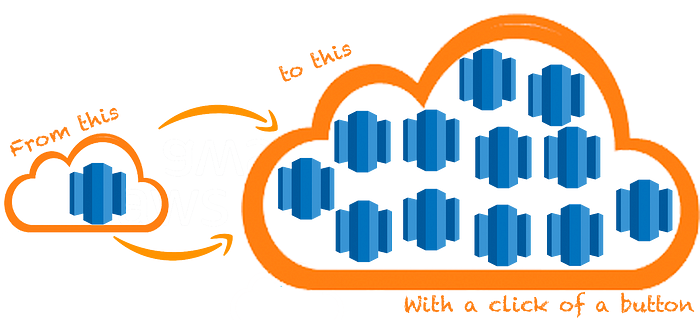

None of the options above looked like a complete solution that we could achieve in a timely manner. Then, unexpectedly, one of Innovid’s AWS Technical Account Managers informed us of a new feature being added to Redshift. This feature is called “Concurrency Scaling” and it allows the scaling of our Amazon Redshift cluster to run a high number of queries concurrently. Bingo! Being able to open our production Redshift cluster for querying by many applications is exactly what we were looking for. It seems like AWS predicted our needs and began fulfilling them before we even realized them ourselves.

Benchmarking

We simulated common real-world use cases and also ran synthetic benchmarks to understand the impact of the new Concurrency Scaling feature. To do so, we compiled three types of queries:

- Short running queries, that take less than 100 milliseconds to complete

- Medium running queries, that may take several minutes to complete

- Long running queries, which take 40–60 minutes to complete

To ensure that Amazon Redshift did not serve results from cache, we changed the query text on every execution.
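One way to vary the query on every execution is to change a predicate value per run. The following is a minimal sketch of that idea only; the table name, column names, and the `vary_query` helper are hypothetical and do not come from our test application. Amazon Redshift also has a session setting, `enable_result_cache_for_session`, that turns off the result cache; the sketch includes the statement for reference, but it is an alternative and not necessarily what was used in these tests.

```python
# Hypothetical query template: table and column names are placeholders.
QUERY_TEMPLATE = (
    "SELECT campaign_id, COUNT(*) AS events "
    "FROM events "
    "WHERE campaign_id <> {nonce} "
    "GROUP BY campaign_id"
)

# Alternative (assumption: not stated in the text): disable the result
# cache for the current session instead of varying the query text.
DISABLE_RESULT_CACHE_SQL = "SET enable_result_cache_for_session TO off;"


def vary_query(run_id: int) -> str:
    """Return query text that is different for every run_id."""
    # Embedding the run id in a predicate changes the SQL text itself,
    # so two executions never submit an identical statement.
    return QUERY_TEMPLATE.format(nonce=-run_id)


if __name__ == "__main__":
    for run_id in range(1, 4):
        print(vary_query(run_id))
```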

We created a test application that gets three parameters at runtime: which type of query to run, how many concurrent queries we would like to have and the test duration. At the end of each test session we generated the output for:

- Number of successful runs

- Number of errors

- Mean running time

- Standard deviation of the running time

- 90th percentile
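A test application of this shape could be sketched as follows. This is an illustrative outline rather than our actual tool: `run_query` is a placeholder that sleeps instead of calling Amazon Redshift, and the argument names are assumptions. It takes the three runtime parameters described above and reports the five output metrics.

```python
import argparse
import random
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def run_query(query_type):
    """Placeholder for a real database call; sleeps to simulate work."""
    time.sleep(random.uniform(0.001, 0.005))


def worker(query_type, deadline):
    """Run queries back to back until the deadline is reached."""
    timings, errors = [], 0
    while time.monotonic() < deadline:
        start = time.monotonic()
        try:
            run_query(query_type)
            timings.append(time.monotonic() - start)
        except Exception:
            errors += 1
    return timings, errors


def summarize(timings, errors):
    """Compute the metrics reported at the end of each test session."""
    enough = len(timings) > 1
    return {
        "successes": len(timings),
        "errors": errors,
        "mean_s": statistics.mean(timings) if timings else None,
        "stdev_s": statistics.stdev(timings) if enough else None,
        # quantiles(n=10) returns 9 cut points; the last is the 90th percentile.
        "p90_s": statistics.quantiles(timings, n=10)[-1] if enough else None,
    }


def run_test(query_type, concurrency, duration_s):
    """Keep `concurrency` workers busy for `duration_s` seconds."""
    deadline = time.monotonic() + duration_s
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        results = list(
            pool.map(lambda _: worker(query_type, deadline), range(concurrency))
        )
    timings = [t for worker_timings, _ in results for t in worker_timings]
    errors = sum(worker_errors for _, worker_errors in results)
    return summarize(timings, errors)


def main(argv=None):
    parser = argparse.ArgumentParser(description="Concurrent query benchmark sketch")
    parser.add_argument("--query-type", choices=["short", "medium", "long"], required=True)
    parser.add_argument("--concurrency", type=int, required=True)
    parser.add_argument("--duration", type=float, required=True, help="seconds")
    args = parser.parse_args(argv)
    stats = run_test(args.query_type, args.concurrency, args.duration)
    for name, value in stats.items():
        print(f"{name}: {value}")
    return stats
```

For example, `main(["--query-type", "short", "--concurrency", "100", "--duration", "60"])` would run 100 concurrent workers for one minute. Threads are used here because the workers spend their time waiting on the database, not on the CPU.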

We benchmarked several parameters related to the Workload Management (WLM) section of the cluster:

- Concurrency Scaling mode

- Short Query Acceleration (SQA)

- WLM Query Concurrency

- Memory allocation between different WLM queues
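The parameters in this list map onto the cluster's WLM JSON configuration. The sketch below builds one such configuration for a two-queue setup. The key names follow the `wlm_json_configuration` parameter format as we understand it, while the `reports` query group, the function name, and the example values are illustrative assumptions, not the settings we benchmarked. Check the key names against the Amazon Redshift documentation before applying anything like this.

```python
import json


def build_wlm_config(reporting_concurrency, reporting_memory_pct,
                     default_concurrency, default_memory_pct,
                     concurrency_scaling="auto",
                     short_query_acceleration=True):
    """Return a WLM JSON string with a reporting queue and a default queue."""
    if concurrency_scaling not in ("auto", "off"):
        raise ValueError("concurrency_scaling must be 'auto' or 'off'")
    if reporting_memory_pct + default_memory_pct > 100:
        raise ValueError("memory percentages must not exceed 100 in total")

    queues = [
        {
            # Queue for offline reports, matched by a hypothetical query group.
            "query_group": ["reports"],
            "query_concurrency": reporting_concurrency,
            "memory_percent_to_use": reporting_memory_pct,
            "concurrency_scaling": concurrency_scaling,
        },
        {
            # Default queue, used here for production data processing.
            "query_concurrency": default_concurrency,
            "memory_percent_to_use": default_memory_pct,
            "concurrency_scaling": "off",
        },
        # Short Query Acceleration toggle.
        {"short_query_queue": short_query_acceleration},
    ]
    return json.dumps(queues, indent=2)


if __name__ == "__main__":
    # Example values only.
    print(build_wlm_config(10, 40, 5, 60))
```

Generating the JSON from a function makes it easy to sweep one parameter at a time across test runs, for example toggling `concurrency_scaling` between `"auto"` and `"off"`.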

To generate the demanding workload for our Amazon Redshift cluster, we used an m5.4xlarge test machine located in the US East Region. Its CPU utilization stayed low throughout the testing period.

We ran more than 40 tests with various configurations, but for the sake of readability, we highlight only a few that represent our findings well.

Test Cases

Test 1: Long running queries. 3 test processes with 100 concurrent requests each.

Test 2: Medium running queries. 3 test processes with 100 concurrent requests each.

Test 3: Short running queries. 3 test processes with 100 concurrent requests each.

Real-Life Scenario

In this test, we wanted to simulate a scenario that mimics a real-life use case. We wanted to check the cluster’s throughput under a mixed workload: long and short queries running in parallel and competing for the same computational resources.

The above chart tells the whole story of the new Concurrency Scaling feature: the ability to run thousands of our batch-processing BI queries while serving hundreds of thousands of short queries in parallel. The number of short queries is far above our expectations and above the regular usage of this cluster, which assures us that we can run them without impacting our ongoing backend processes. That was great news for us.

This is an enormous query processing throughput, a milestone for any analytics system. From the results above, we saw that the scenarios that improved the most were those that mixed long queries with short queries. Running those scenarios showed that with the Concurrency Scaling feature on (Auto), we can serve a large number of short queries (more than 177,000 in one hour) and at the same time execute around 2,900 long-running queries. This long-query throughput is 11 times higher than what we would have achieved on a regular, single Amazon Redshift cluster.
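To put these figures on a more intuitive footing, the short calculation below converts them to a per-second rate and derives the single-cluster baseline implied by the 11x factor. It is plain arithmetic on the numbers quoted above, and it assumes the long-running queries were counted over the same one-hour window as the short ones. The baseline is an implied value, not a separately measured result.

```python
SHORT_QUERIES_PER_HOUR = 177_000  # "more than 177,000 queries in one hour"
LONG_QUERIES = 2_900              # "around 2900"; same window is an assumption
SPEEDUP = 11                      # reported improvement over a single cluster

# Average rate of short queries served per second.
short_per_second = SHORT_QUERIES_PER_HOUR / 3600

# Long-query count a single cluster would reach if the 11x factor holds.
implied_single_cluster_long = LONG_QUERIES / SPEEDUP

print(f"Short queries per second: {short_per_second:.1f}")
print(f"Implied single-cluster long queries: {implied_single_cluster_long:.0f}")
```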

Conclusion

Based on the above results, we once again chose to work with Amazon Redshift. We have been working with Amazon Redshift for more than 5 years and find that it more than meets our needs.

AWS’s pricing plan for the Concurrency Scaling feature allows us to predict our data analytics costs while keeping them within budget. Amazon Redshift provides one hour of free concurrency scaling credit for every 24 hours that the main cluster is running. This keeps the month-to-month variation in cost low.
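The credit rule can be turned into a quick estimate of billable concurrency scaling time. The sketch below models only the one-hour-per-24-hours rule stated above. It ignores any cap on accrued credits and any per-second billing detail, and the function names and the hourly rate are hypothetical, so treat it as a rough planning aid and not a statement of AWS billing.

```python
def free_credit_hours(main_cluster_hours):
    """One hour of free credit for every 24 hours the main cluster runs."""
    return main_cluster_hours / 24.0


def billable_scaling_hours(main_cluster_hours, scaling_hours_used):
    """Concurrency scaling hours left over after free credits are applied."""
    credits = free_credit_hours(main_cluster_hours)
    return max(0.0, scaling_hours_used - credits)


def estimated_cost(main_cluster_hours, scaling_hours_used, hourly_rate):
    """Estimated charge; hourly_rate is a hypothetical input, not an AWS price."""
    return billable_scaling_hours(main_cluster_hours, scaling_hours_used) * hourly_rate


if __name__ == "__main__":
    month_hours = 30 * 24  # main cluster running for a full 30-day month
    print(free_credit_hours(month_hours))            # 30.0
    print(billable_scaling_hours(month_hours, 25))   # 0.0
    print(billable_scaling_hours(month_hours, 40))   # 10.0
```

In this model, a cluster that runs all month earns 30 credit hours, so usage up to that amount adds nothing to the bill.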

Additionally, with Amazon Redshift we can easily access our data lake on Amazon S3 (Parquet files) without making any changes, and storing our data in open formats in the Amazon S3 data lake gives us maximum flexibility in analyzing it. These are major factors that support our decision to use Amazon Redshift every day.

The new Concurrency Scaling feature allows us to expand our analytics capabilities and offer new data streams that we couldn’t have considered before. We can now generate reports on our data lake without affecting our main processing. Not only can we run more queries on our Amazon Redshift cluster, but each query also performs better.

We find a perfect fit between our ever-growing need to process more and more data and the ability of Amazon Redshift’s Concurrency Scaling to meet this demand without any additional overhead.

By Adam Sharvit and Ofer Buchnik, Innovid